The phrase “stranger danger” applies not only offline but also online. Outside your personal network, you are essentially interacting in a virtual world filled with strangers. This digital world, bustling with people you may never have met in person, demands caution and vigilance.

Online threats are not confined to children; they affect people of all ages. No one is exempt from the dangers lurking in the digital sphere, so everyone needs to be mindful and cautious about their online interactions.

One of the most effective ways to protect the safety and security of an online community is to use content moderation services. Content moderation is a proactive approach to filtering, reviewing, and controlling the influx of user-generated content, shielding the community from harmful, offensive, or inappropriate material.

Let’s dive into how content moderation as a service safeguards you from online threats.


1. Profile Checking

More and more people are joining the online sphere, and more people means more profiles, especially since a single person can have multiple accounts.

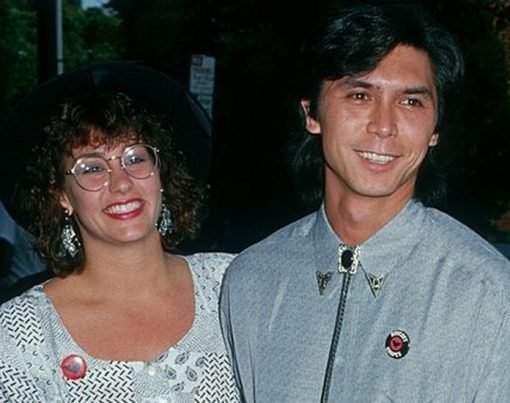

As online participation keeps growing, profile moderation and image moderation services become crucial. Profile moderation detects and addresses inconsistencies or suspicious activity in user bios, while image moderation verifies that the photos and other visuals users share are genuine.

2. Fraud Detection

Internet anonymity is a double-edged sword. On the one hand, it protects people’s identities. On the other hand, it enables bad actors to commit all kinds of fraud against unsuspecting victims.

Among the several kinds of fraudulent activities, here are three of the most common:

● Phishing

Much like a fisherman casting a baited line, a fraudster sends an email that imitates one from a reputable company. The familiar look of the message is the bait: it is designed to win the recipient’s trust. The email usually contains a link to a fraudulent website or to a program that steals the victim’s personal information.

● Malware

Short for malicious software, malware is a program intentionally created to disrupt a computer’s functions. It can do all sorts of damage, such as stealing sensitive data, leaking personal information, and compromising your computer’s security.

● Online Romance Scams

Closely tied to fake profiles, an online romance scam happens when a bad actor adopts a fake online identity to win another user’s affection and trust. Once the victim trusts them, the scammer claims to be in trouble and asks for money. Without intervention, this scheme can continue for years.

As technology advances, so do bad actors and their methods. Content moderation solutions such as image moderation services go a long way toward shielding you from fraud.

By incorporating content moderation mechanisms, platforms can effectively identify and eliminate malicious content before it reaches unsuspecting users. Whether it’s scrutinizing user profiles for inconsistencies, verifying the authenticity of listings, or analyzing links for potential threats, these solutions act as a vigilant gatekeeper, fortifying the digital ecosystem against harmful elements.
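To make “analyzing links for potential threats” a little more concrete, here is a minimal sketch of one simple check: comparing each link’s host against a blocklist of known-bad domains. The domain list, the regular expression, and the function names are hypothetical, chosen for illustration; real platforms typically draw on maintained threat-intelligence feeds and combine several signals rather than relying on a hard-coded list.

```python
import re
from urllib.parse import urlparse

# Hypothetical blocklist. The ".example" names are reserved placeholders; a real
# platform would load known-bad domains from a maintained threat feed.
BLOCKED_DOMAINS = {"secure-bank-login.example", "free-prizes.example"}

# Rough pattern for http(s) links; good enough for a sketch, not a full URL parser.
URL_PATTERN = re.compile(r"https?://\S+", re.IGNORECASE)


def is_blocked_host(host: str) -> bool:
    """Return True if host is a blocked domain or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in BLOCKED_DOMAINS)


def suspicious_links(message: str) -> list[str]:
    """Return every link in message whose host appears on the blocklist."""
    flagged = []
    for url in URL_PATTERN.findall(message):
        # Drop trailing punctuation picked up from the surrounding sentence.
        url = url.rstrip(".,;:!?)")
        host = urlparse(url).hostname  # None if the URL has no host
        if host and is_blocked_host(host):
            flagged.append(url)
    return flagged


if __name__ == "__main__":
    msg = "Your account is locked! Verify at https://login.secure-bank-login.example/verify."
    print(suspicious_links(msg))  # prints ['https://login.secure-bank-login.example/verify']
```

Matching on the parsed hostname rather than on raw text means that a domain which merely contains a blocked name, such as `notsecure-bank-login.example`, is not flagged, while genuine subdomains of a blocked domain are.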

3. Cyberbullying Prevention

Content moderators play a pivotal role beyond content filtering; they also act as skilled mediators in handling online conflicts, particularly when individuals face harassment. This aspect of their role becomes especially crucial in creating a safe and supportive online community.

In instances of online harassment, moderators swiftly intervene to address the situation effectively. Their prompt action involves identifying the harassers and implementing appropriate measures to ensure that such behavior is curbed. This may involve issuing warnings, temporary suspensions, or even permanent bans, depending on the severity of the offense and platform guidelines.
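The graduated response described above, from warnings to suspensions to bans, can be sketched as a small policy function. This is a minimal illustration under assumed rules: the 1-to-3 severity scale, the offense-count thresholds, and the names `Action` and `choose_action` are hypothetical and do not reflect any particular platform’s guidelines.

```python
from enum import Enum


class Action(Enum):
    WARNING = "warning"
    TEMPORARY_SUSPENSION = "temporary suspension"
    PERMANENT_BAN = "permanent ban"


def choose_action(severity: int, prior_offenses: int) -> Action:
    """Pick a sanction from offense severity (1 = mild, 3 = severe) and history.

    The thresholds are illustrative assumptions; each platform sets its own
    rules in its community guidelines.
    """
    if severity not in (1, 2, 3):
        raise ValueError("severity must be 1, 2, or 3")
    if prior_offenses < 0:
        raise ValueError("prior_offenses cannot be negative")
    # Severe harassment (e.g. threats) or a third offense: remove the account.
    if severity == 3 or prior_offenses >= 2:
        return Action.PERMANENT_BAN
    # Moderate harassment or a second offense: suspend temporarily.
    if severity == 2 or prior_offenses == 1:
        return Action.TEMPORARY_SUSPENSION
    # First, mild offense: a warning is usually enough.
    return Action.WARNING


if __name__ == "__main__":
    print(choose_action(severity=1, prior_offenses=0).value)  # warning
    print(choose_action(severity=1, prior_offenses=1).value)  # temporary suspension
```

Writing the policy down as an explicit function makes it consistent and easy to audit, though in practice a human moderator would still weigh context before applying the suggested sanction.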

4. Hate Speech Detection

Another downside of anonymity is that it lets people spout hurtful words without consequence. Misogyny, homophobia, antisemitism, racism, and other kinds of hate speech can become rampant if left unchecked.

Unfortunately, it takes just one hostile individual to turn an otherwise vibrant comment section into a toxic cesspool. The harm of hate speech can be far-reaching, affecting the well-being and emotional safety of those it targets.

To keep up with the volume of content created every day, human moderators rely on the aid of artificial intelligence (AI). Given a database of inappropriate keywords and phrases, an automated system flags any content containing them and sends it to a moderator for review.
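The keyword-flagging workflow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the term list and the names `BLOCKED_TERMS`, `find_blocked_terms`, and `screen_post` are hypothetical, and real moderation systems are considerably more sophisticated. As in the paragraph above, matching content is only queued for a human moderator, not deleted automatically.

```python
import re

# Hypothetical list of blocked keywords and phrases. Real platforms maintain
# much larger, curated, and regularly updated databases.
BLOCKED_TERMS = ["free money", "scam", "idiot"]


def find_blocked_terms(text: str, terms: list[str]) -> list[str]:
    """Return every blocked term that appears in text as a whole word or phrase."""
    hits = []
    for term in terms:
        # \b anchors avoid flagging innocent words that merely contain a term,
        # e.g. "scampi" should not match "scam".
        pattern = r"\b" + re.escape(term) + r"\b"
        if re.search(pattern, text, flags=re.IGNORECASE):
            hits.append(term)
    return hits


def screen_post(post_id: str, text: str, review_queue: list) -> bool:
    """Queue a post for human review if it contains any blocked term."""
    hits = find_blocked_terms(text, BLOCKED_TERMS)
    if hits:
        # The final decision stays with a human moderator, who can judge context.
        review_queue.append({"post_id": post_id, "matched": hits})
        return True
    return False


if __name__ == "__main__":
    queue = []
    screen_post("p1", "Claim your FREE MONEY now!", queue)
    screen_post("p2", "I had scampi for dinner.", queue)
    print(queue)  # prints [{'post_id': 'p1', 'matched': ['free money']}]
```

Simple keyword matching cannot tell an insult from a quotation or a reclaimed term, which is exactly why flagged items go to a human moderator rather than being removed outright.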

AI tools such as AI image moderation are instrumental in handling the immense volume of content uploaded every day. They free human moderators to focus on the most critical aspects of content curation, such as assessing context and ensuring fair judgment in complex situations.

Ensure Swift and Effective Response

By enlisting content moderation service providers, online platforms can create an environment conducive to open discussion and interaction while deterring malicious intent. Moderation bolsters trust among community members and fosters a sense of belonging by ensuring that shared content aligns with the community’s values and guidelines.

Content moderation services are a formidable way to fortify the safety and integrity of an online community. As we traverse the digital landscape, it is essential to be cautious and proactive in protecting ourselves and those around us from the risks that come with engaging in the vast online world of strangers.